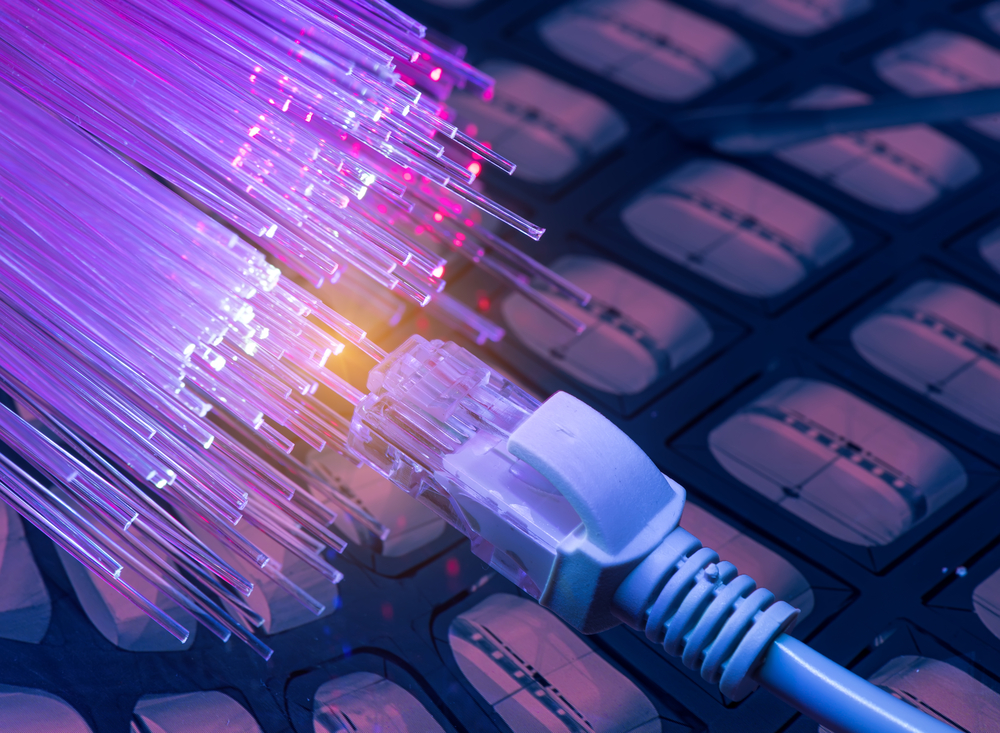

The Hidden World of Network Protocol Optimization

In the vast landscape of modern telecommunications, network protocol optimization remains one of the most crucial yet understated elements driving our digital experiences. While consumers focus on bandwidth figures and connection speeds, engineers worldwide are engaged in the intricate science of fine-tuning how data actually flows through networks. This optimization process impacts everything from the responsiveness of mobile applications to the reliability of video calls and the efficiency of large-scale data transfers. The difference between a seamlessly functioning network and one plagued with delays often lies in protocol-level adjustments invisible to end users.

Understanding Network Protocols: The Digital Rulebook

Network protocols serve as the foundational rulebooks governing how data travels between devices. These protocols define everything from how connections are established and torn down to how data packets are formatted, transmitted, and verified. The Transmission Control Protocol/Internet Protocol (TCP/IP) suite forms the backbone of most internet communications today, but it’s just one family among dozens of specialized protocols. Each protocol layer addresses a different aspect of the communication process: physical transmission, addressing, routing, session management, and application-specific requirements. Understanding these layers is essential for meaningful optimization, as inefficiencies at one level can cascade throughout the entire communication chain.

The development of these protocols wasn’t a straightforward process. Early ARPANET transmissions in the late 1960s and 1970s revealed fundamental challenges in establishing reliable communications across unreliable networks. Engineers like Vint Cerf and Bob Kahn developed TCP/IP specifically to address these reliability concerns, creating a robust system that could route around damage and verify data integrity. This historical context explains why many protocols prioritize reliability over speed, a trade-off that modern optimization techniques now seek to balance differently.

Protocol Overhead: The Invisible Bandwidth Consumer

One of the most significant challenges in network efficiency involves protocol overhead: the extra data transmitted solely to manage connections rather than carry actual content. This overhead can consume surprisingly large portions of available bandwidth. For example, in traditional TCP communications, the “three-way handshake” exchanges three packets, costing a full round trip, before the client can send any application data. Header information attached to each packet further reduces efficiency: a minimal IPv4 header and a minimal TCP header take 20 bytes each, so a TCP/IP packet carries at least 40 bytes of headers. That is negligible for large transfers but can double bandwidth requirements for small, frequent transmissions like IoT sensor readings or real-time gaming data.
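The arithmetic behind that doubling is easy to check. The sketch below is illustrative only: it assumes a 40-byte header (a 20-byte IPv4 header plus a 20-byte TCP header, no options), and the function name `overhead_fraction` is made up for this example.

```python
def overhead_fraction(payload_bytes: int, header_bytes: int = 40) -> float:
    """Return the share of each packet's bytes spent on headers.

    header_bytes defaults to 40, an assumed minimal IPv4 + TCP header
    with no options; real packets may carry more.
    """
    if payload_bytes < 0 or header_bytes < 0:
        raise ValueError("sizes must be non-negative")
    total = payload_bytes + header_bytes
    if total == 0:
        raise ValueError("packet must contain at least one byte")
    return header_bytes / total


# Small payloads are dominated by headers; full-size segments are not.
for payload in (20, 40, 512, 1460):
    share = overhead_fraction(payload)
    print(f"{payload:>5} B payload: {share:.1%} of the packet is header")
```

With a 40-byte payload, half of every packet is header, which is the doubling described above. With a 1460-byte payload the share falls below 3%.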

Modern protocol optimization techniques address this overhead through various approaches. Header compression schemes like ROHC (Robust Header Compression) exploit the fact that most header fields stay constant or change predictably within a flow, and can shrink 40 bytes of IP and transport headers to just 1-3 bytes in many cases. Connection multiplexing, implemented in protocols like HTTP/2, allows multiple data streams to share a single connection, eliminating redundant handshakes. TCP Fast Open lets a client that has connected to a server before carry data in the opening packet of the handshake rather than waiting for the handshake to complete. Together, these techniques can dramatically reduce latency and improve bandwidth utilization, particularly for applications requiring many small, frequent data exchanges.
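A rough way to see why handshake savings matter is to count round trips. The model below is a deliberately simplified sketch, not a measurement: it assumes requests are issued one after another, that each request and response fits in a single round trip, and it ignores TLS, slow start, and server processing time. The strategy names are labels invented for this example.

```python
def round_trips(requests: int, strategy: str) -> int:
    """Count round trips needed to complete `requests` sequential exchanges.

    Strategies (hypothetical labels for this sketch):
      per_request: a fresh TCP connection per request
                   (1 handshake RTT + 1 exchange RTT each)
      fast_open:   a fresh connection per request, but data rides on the
                   opening packet (assumes a previous connection to the server)
      shared:      one handshake, then every request reuses that connection
    """
    if requests < 0:
        raise ValueError("requests must be non-negative")
    if strategy == "per_request":
        return 2 * requests
    if strategy == "fast_open":
        return requests
    if strategy == "shared":
        return 1 + requests if requests else 0
    raise ValueError(f"unknown strategy: {strategy}")


rtt_ms = 80  # assumed round-trip time for illustration
for name in ("per_request", "fast_open", "shared"):
    trips = round_trips(10, name)
    print(f"{name:>12}: {trips} round trips, about {trips * rtt_ms} ms")
```

Real multiplexed connections can also issue requests concurrently, so the sequential count for the shared case is a conservative upper bound.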

Congestion Control: Traffic Management for the Information Superhighway

Network congestion remains one of the most persistent challenges in telecommunications. When too many data packets attempt to traverse the same network segments simultaneously, buffer overflows occur, leading to packet loss, retransmissions, and spiraling performance degradation. Traditional congestion control mechanisms like TCP’s slow-start and congestion avoidance algorithms have served the internet well for decades but were designed for networks with fundamentally different characteristics than today’s high-speed, wireless-heavy infrastructure.
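The slow-start and congestion-avoidance behavior mentioned above can be sketched in a few lines. This is a simplified, Reno-like model under stated assumptions: the window is counted in whole segments, it is updated once per round trip, and a loss halves the window and continues in congestion avoidance. Real TCP stacks are considerably more detailed, and the function name is made up for this example.

```python
def simulate_cwnd(rounds: int, loss_rounds: set[int], ssthresh: int = 16) -> list[int]:
    """Return the congestion window (in segments) at the start of each round.

    loss_rounds holds the round indices in which a loss is detected.
    """
    cwnd = 1
    history = []
    for r in range(rounds):
        history.append(cwnd)
        if r in loss_rounds:
            # Multiplicative decrease: halve the window on loss.
            ssthresh = max(cwnd // 2, 2)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            # Slow start: double each round trip, capped at the threshold.
            cwnd = min(cwnd * 2, ssthresh)
        else:
            # Congestion avoidance: grow by one segment per round trip.
            cwnd += 1
    return history


print(simulate_cwnd(10, loss_rounds={6}, ssthresh=8))
# -> [1, 2, 4, 8, 9, 10, 11, 5, 6, 7]
```

The output shows the familiar sawtooth: rapid exponential growth, slow linear probing, then a sharp cut whenever a loss is detected, whatever the cause of that loss.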

Next-generation congestion control algorithms represent one of the most active areas in protocol optimization research. Google’s BBR (Bottleneck Bandwidth and Round-trip propagation time) algorithm paces its sending rate from a model of the path, built from the measured bottleneck bandwidth and minimum round-trip time, rather than relying solely on packet loss as a congestion signal. Similarly, delay-based algorithms like TCP Vegas and FAST TCP treat rising round-trip times as an early warning sign of congestion, allowing more proactive throttling before packet loss occurs. These innovations are particularly important for video streaming, cloud gaming, and other latency-sensitive applications where traditional loss-based congestion control creates visible performance issues. Implementation challenges remain, however, as networks containing mixed algorithm types can sometimes interact in unpredictable ways.
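The delay-based idea can be illustrated with a Vegas-style window update. The sketch below follows the general scheme (compare the expected rate at the minimum RTT with the actual rate at the current RTT), but the function name and the thresholds `alpha` and `beta` are assumptions chosen for illustration, not values taken from any particular implementation.

```python
def vegas_adjust(cwnd: int, base_rtt: float, current_rtt: float,
                 alpha: float = 2.0, beta: float = 4.0) -> int:
    """Return the next congestion window (in segments), Vegas style.

    base_rtt is the smallest RTT seen so far (seconds); current_rtt is
    the latest sample. alpha and beta are assumed thresholds, expressed
    as the estimated number of segments sitting in network queues.
    """
    expected_rate = cwnd / base_rtt    # rate if no queueing occurred
    actual_rate = cwnd / current_rtt   # rate actually being achieved
    queued = (expected_rate - actual_rate) * base_rtt
    if queued < alpha:
        return cwnd + 1                # path looks underused: speed up
    if queued > beta:
        return max(cwnd - 1, 1)        # queues are building: back off early
    return cwnd                        # within the target band: hold steady


print(vegas_adjust(20, base_rtt=0.100, current_rtt=0.100))  # no queueing
print(vegas_adjust(20, base_rtt=0.100, current_rtt=0.115))  # mild queueing
print(vegas_adjust(20, base_rtt=0.100, current_rtt=0.150))  # heavy queueing
```

The window shrinks in the third case even though no packet has been lost, which is what distinguishes this family from loss-based control.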

Protocol Adaptation for Wireless Networks

The explosive growth of mobile connectivity has exposed fundamental limitations in protocols originally designed for wired networks. Wireless connections face unique challenges including signal interference, mobility handovers, fluctuating bandwidth, and higher error rates. Traditional TCP interprets every packet loss as a sign of congestion, triggering counterproductive slowdowns when the actual issue is temporary radio interference. In unfavorable conditions, this misdiagnosis can reduce throughput by 50% or more on otherwise capable wireless networks.

Specialized wireless-aware protocols have emerged to address these limitations. TCP Westwood+ modifies standard congestion control by estimating available bandwidth from the rate of returning acknowledgments, so a loss caused by radio interference does not force the same drastic slowdown as genuine congestion. TCP CUBIC is often mentioned alongside it, but CUBIC remains a loss-based algorithm aimed at high-bandwidth, long-delay paths and does not itself distinguish between congestion-related and wireless-related loss. Mobile-optimized tunneling protocols implement intelligent packet retransmission that occurs at the wireless edge rather than requiring end-to-end retransmission. Cross-layer optimization techniques allow applications to receive signals from lower network layers about changing wireless conditions, enabling adaptive behaviors like dynamic video quality adjustment or transmission pausing during handovers between cell towers. These adaptations have become increasingly important as mobile data consumption continues to grow rapidly, with users expecting consistent performance regardless of their connection method.
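To make the contrast concrete, the sketch below compares a conventional halving response to loss with a Westwood-style response that resets the threshold to the estimated bandwidth-delay product. It is a minimal illustration under stated assumptions: the bandwidth estimate is supplied as an input (a real sender derives it by filtering acknowledgment arrivals), and the function names and the 1460-byte segment size are hypothetical choices for this example.

```python
def reno_ssthresh(cwnd_segments: int) -> int:
    """Conventional response to loss: halve the window."""
    return max(cwnd_segments // 2, 2)


def westwood_ssthresh(bw_estimate_bps: float, min_rtt_s: float,
                      mss_bytes: int = 1460) -> int:
    """Westwood-style response: set the threshold to the estimated
    bandwidth-delay product, expressed in segments.

    bw_estimate_bps is an assumed, externally supplied bandwidth
    estimate in bits per second.
    """
    bdp_bytes = (bw_estimate_bps / 8) * min_rtt_s
    return max(int(bdp_bytes / mss_bytes), 2)


# A sender with a 40-segment window on a 10 Mbit/s, 50 ms path
# loses one packet to radio interference.
print("halving:       ", reno_ssthresh(40))
print("Westwood-style:", westwood_ssthresh(10_000_000, 0.050))
```

In this scenario the path can still carry roughly 42 segments per round trip, so the bandwidth-based threshold leaves the sender near full speed while plain halving drops it to 20.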

Real-Time Protocol Optimization: The Quest for Minimal Latency

Perhaps the most demanding frontier in protocol optimization involves supporting real-time applications where even milliseconds matter. Video conferencing, online gaming, financial trading, and industrial automation all require minimal latency to function properly. Traditional protocols that prioritize reliability over speed often introduce unacceptable delays for these use cases, leading to the development of specialized real-time protocols that make different trade-offs.

The User Datagram Protocol (UDP) forms the foundation for many real-time applications by eliminating connection establishment and guaranteed delivery mechanisms that introduce delays. Building on UDP, protocols like RTP (Real-time Transport Protocol) add timing information and sequence numbering without enforcing strict reliability. WebRTC technologies incorporate adaptive jitter buffering and selective retransmission to maintain quality while minimizing delay. Perhaps most remarkably, specialized gaming-oriented protocols can now prioritize the most time-sensitive data packets (like player position updates) while using available bandwidth more efficiently for less time-critical information (like environment details). These advances haven’t just improved existing applications but have enabled entirely new categories of real-time services that would have been technically impossible under older protocol paradigms.
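The timing and sequencing information RTP adds is compact. The sketch below packs and parses the 12-byte fixed RTP header (version, marker, payload type, sequence number, timestamp, and source identifier) using Python's `struct` module. It is a minimal illustration that assumes no padding, no header extension, and no contributing-source list; the helper names and the sample values are made up for this example.

```python
import struct

# Fixed RTP header: two flag bytes, 16-bit sequence number,
# 32-bit timestamp, 32-bit SSRC, all in network byte order.
RTP_HEADER = struct.Struct("!BBHII")


def pack_rtp_header(payload_type: int, sequence: int, timestamp: int,
                    ssrc: int, marker: bool = False) -> bytes:
    """Build a 12-byte RTP header (version 2, no padding/extension/CSRCs)."""
    byte0 = 2 << 6                                   # version field = 2
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return RTP_HEADER.pack(byte0, byte1, sequence & 0xFFFF,
                           timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def unpack_rtp_header(data: bytes) -> dict:
    """Parse the fixed header fields from the start of a packet."""
    byte0, byte1, sequence, timestamp, ssrc = RTP_HEADER.unpack(
        data[:RTP_HEADER.size])
    return {
        "version": byte0 >> 6,
        "marker": bool(byte1 >> 7),
        "payload_type": byte1 & 0x7F,
        "sequence": sequence,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


header = pack_rtp_header(payload_type=96, sequence=7, timestamp=160, ssrc=0x1234)
print(len(header), unpack_rtp_header(header))
```

A receiver can spot missing packets from gaps in `sequence` and schedule playback from `timestamp`, without the sender ever pausing to retransmit.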

The Future of Network Protocol Optimization

As we look toward the future, protocol optimization faces both challenges and opportunities. The transition to encrypted communications has restricted visibility into packet contents, limiting some optimization techniques but spurring innovation in metadata-based approaches. Machine learning algorithms are increasingly being deployed to predict network conditions and proactively adjust protocol parameters, moving beyond traditional static rule sets. Protocol-aware hardware acceleration is making higher-performance optimization possible at network scale rather than just at endpoints.

The most promising frontier may be intent-based networking, where protocols adapt based on the specific requirements of applications rather than applying one-size-fits-all transmission rules. This approach could eventually create self-optimizing networks where protocols dynamically reconfigure themselves based on real-time conditions and application needs. While significant technical barriers remain, the economic incentives for continued innovation are substantial as network optimization directly impacts both user experience and infrastructure costs. As telecommunications continues its evolution, protocol optimization will remain an essential discipline driving performance improvements even as raw bandwidth continues to increase.